Medical and physician associate (PA) programs have increased clinical exposure by expanding standardized patient encounters, investing in simulation, and redesigning clerkships.

But more exposure has not produced more consistent preparation. In one survey, nearly 68% of physician associate educators reported deficiencies in students’ differential diagnosis proficiency. In another survey of U.S. internal medicine clerkship directors, 84% of students were rated as having poor (29%) or fair (55%) clinical reasoning when entering the clerkship.

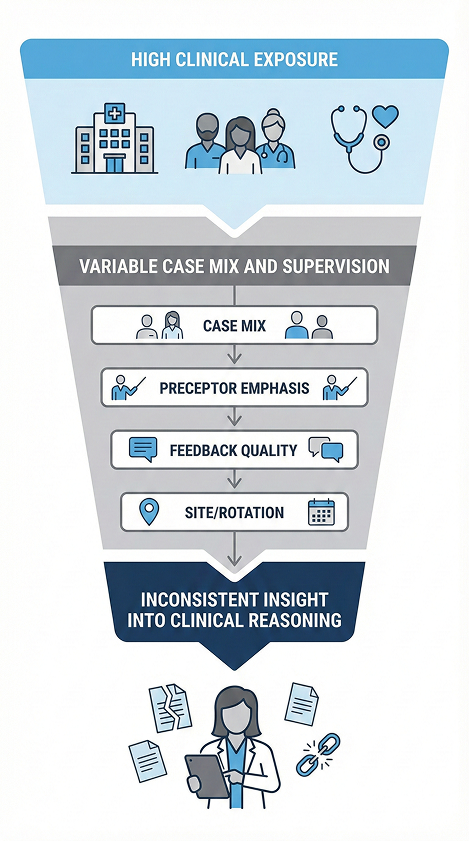

Learners rotate across different sites, services, and preceptors, and they participate in simulations, Objective Structured Clinical Examinations (OSCEs), and standardized patient (SP) encounters. Even so, assessment of their clinical reasoning is often inconsistent, because it depends on which patients they happen to encounter.

This variability makes readiness difficult to assess and costly to address. Faculty spend significant time reconstructing student reasoning after the fact, reconciling uneven evaluations, and remediating gaps late in training. When exposure and assessment are inconsistent, differences in performance can reflect opportunity rather than ability, leaving educators without a reliable, efficient way to compare learners, track progress over time, or intervene early.

AI-enabled simulation brings assessment earlier and standardizes how learners are evaluated, cutting down on late-stage remediation and manual review.

Read on to explore how AI-powered, simulation-based assessment can reduce faculty effort while enabling earlier, more consistent assessment of clinical reasoning.

Uneven exposure creates uneven insight

Clinical exposure has increased across training programs, yet insight into learner readiness remains inconsistent. Because learners are exposed to different clinical scenarios and levels of diagnostic complexity, differences in student performance can reflect opportunity rather than ability.

What uneven exposure looks like in practice:

- Learners in the same cohort encounter very different chief complaints, acuity levels, and diagnostic complexity

- Some learners regularly engage in diagnostic uncertainty, hypothesis generation, and refinement

- Others have limited opportunities to practice reasoning because cases are stable, protocol-driven, or predictable

Why this creates an insight gap:

- Performance differences often reflect opportunity, not ability

- Faculty cannot reliably compare learners who have faced different clinical demands

- Reasoning gaps stay hidden until core rotations, when remediation is harder and more resource-intensive

The result is limited early visibility into clinical reasoning, delayed identification of gaps, and increased faculty burden during late-stage training. This reflects a structural limitation of current training models.

Why traditional evaluation methods cannot overcome variability

Medical and PA programs have long relied on exams, OSCEs, SP encounters, and preceptor evaluations to assess readiness. While each method plays an important role, none consistently surfaces clinical reasoning. For example, in one survey, only 42.7% of students reported that preceptor evaluations gave them insight into their clinical reasoning, reflecting the limits of episodic, context-dependent assessment rather than the quality of instruction itself.

Tool limitations include:

- Written assignments: measure knowledge, not reasoning processes

- OSCEs: assess communication and structured tasks, but often capture outcomes rather than diagnostic pathways

- SPs: high fidelity but expensive (upwards of $900 per student) and capacity-limited, and feedback quality varies depending on SP training and faculty involvement

- Simulations: expensive and access-limited, making diagnostic reasoning difficult to observe consistently

Evaluator variability:

- Assessments depend heavily on evaluator background and training, from SP-led OSCEs to faculty debriefing. One study finds that 36% of Pediatric Critical Care Medicine faculty “had not participated in any formal teaching skill development as faculty.”

- Preceptor evaluations are shaped by local case mix and individual interpretation

- Narrative feedback and video review are time-intensive, uneven, and often delayed

Together, these tool limitations and evaluator differences make it difficult to compare learners reliably or identify emerging concerns early.

Scale clinical exposure and reasoning with AI simulation

Simulation itself is not new in medical and PA education. What is new is the ability to use AI-driven simulation as a standardized, scalable assessment of clinical reasoning, not just an isolated learning activity.

AI simulation-based assessment addresses the core problem of uneven exposure by creating a controlled environment in which every learner encounters comparable diagnostic complexity and decision-making demands. Rather than relying on chance clinical encounters, programs can intentionally design when and how reasoning is observed.

What AI simulation makes possible:

- Standardized, clinician-developed, AI-powered case exposure, ensuring every learner engages with comparable diagnostic complexity regardless of rotation site or patient mix

- Consistent AI-enabled evaluation of clinical reasoning, driven by clear scoring criteria, structured prompts, and consistent rubric application aligned to competencies and milestones

- Early, comparable AI insight into readiness that surfaces reasoning gaps before learners enter high-stakes clinical environments

- AI OSCE-equivalent simulation at up to 80% lower cost than traditional assessment methods, enabling scale without proportional increases in staffing or scheduling complexity

- Reduced faculty burden, by using AI to expand case exposure, capture reasoning steps, and generate structured assessment data without requiring additional faculty review time

With DDx:

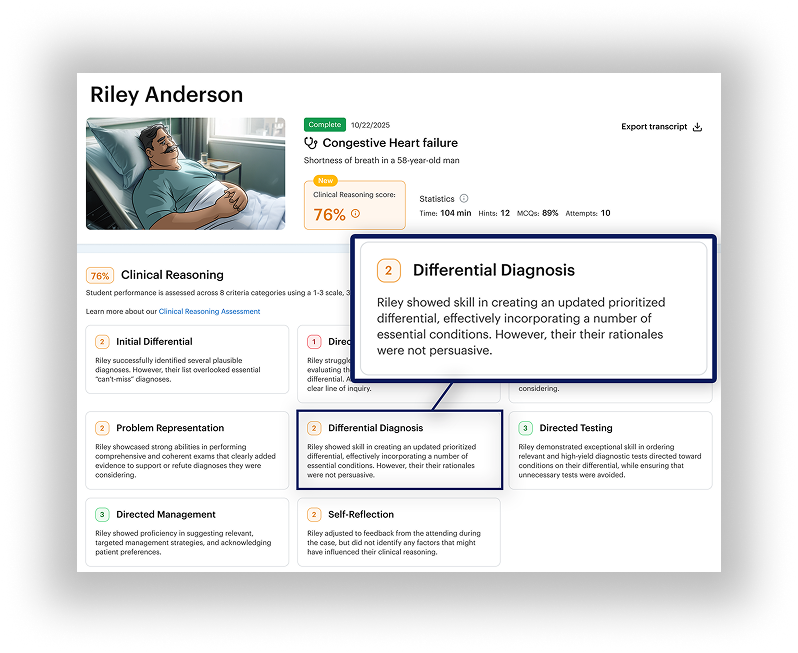

DDx is designed to bring consistency to clinical reasoning assessment while accommodating the distinct goals of medical and PA education. Medical programs require insight into diagnostic depth and decision-making under uncertainty, while PA programs prioritize broad exposure, adaptability, and readiness within accelerated timelines. By standardizing the cognitive demands learners encounter rather than the patients they happen to see, DDx allows programs to evaluate clinical reasoning fairly and comparably without constraining how or where learners train.

- Every learner reasons through comparable diagnostic complexity and uncertainty, regardless of rotation site or patient mix

- Clinical reasoning is evaluated at the level of judgment, prioritization, and decision-making, not exposure alone

- Exposure variability is neutralized, enabling consistent assessment across specialties, care settings, and program structures

- Readiness insight reflects shared cognitive demands rather than chance clinical encounters

Faculty also benefit, without added cost or workload. They are able to:

- Gain early insight into clinical reasoning across learners who have had different clinical experiences

- Customize cases to curricular priorities, milestones, and areas where exposure is known to be uneven

- Target support precisely, rather than relying on broad or late-stage remediation

Simulation-based assessment does not replace clinical education or SPs. Instead, it adds a standardized, repeatable view of clinical reasoning that strengthens existing training models.

A modern readiness model

DDx-centered, AI-enabled simulation supports a shift from chance exposure to shared benchmarks by standardizing how reasoning is observed. Learners work through the same diagnostic challenges, allowing faculty to evaluate how they generate, prioritize, and revise differentials, even when real-world experiences vary.

- Assign 200+ virtual patient encounters to match the curriculum

- See how learners think and evaluate clinical reasoning consistently across cohorts using a dedicated Clinical Reasoning Assessment system

- Scale assessment across cohorts without additional program cost or faculty burden

Because DDx is repeatable and scalable, programs gain early, comparable insight into clinical reasoning and can support readiness decisions with greater consistency, without increasing faculty burden.