Graduate Medical Education (GME) residency programs face a structural challenge when a new cohort of interns arrives with inconsistent levels of clinical experience.

As residents progress beyond orientation and rotate across services, they are expected to make safe decisions amid shifting acuity and workload, variable clinical exposure, and inconsistent supervision, even though assessment occurs at fixed intervals.

Intern bootcamps help establish a baseline through lectures, simulations, and skills refreshers, but current approaches don't thoroughly assess readiness. Without consistent longitudinal insight across rotations and clinical contexts, reasoning gaps throughout residency often go undetected until they require more intensive remediation.

This tension underscores a core need in residency training: early and consistent longitudinal visibility into how residents think, so programs can identify emerging gaps, support timely remediation, and track development across training.

The visibility gap: why episodic assessment misses risk

Most residency programs rely on episodic and retrospective assessments to evaluate readiness. They capture performance at a specific moment in time, often under ideal conditions.

Examples of episodic and retrospective assessments include:

- Orientation assessments

- In-Training Exam (ITE)

- Simulation exercises

- Morning report

- Milestone evaluations

- End-of-rotation feedback

But these assessments answer a limited question: can this resident perform under ideal, structured conditions at a single moment in time?

In addition to variability in evaluation depth and quality driven by changing supervisors and clinical settings, these assessments fail to capture how residents reason over time, with new information, across contexts, and under cognitive load. Gaps often remain hidden until residents face real clinical pressure, like night shifts with multiple patients, incomplete information, fatigue, and limited supervision.

When they do appear, these struggles show up as:

- Delayed recognition of deterioration

- Incomplete differentials

- Missed red flags

- Uncertainty about when to escalate

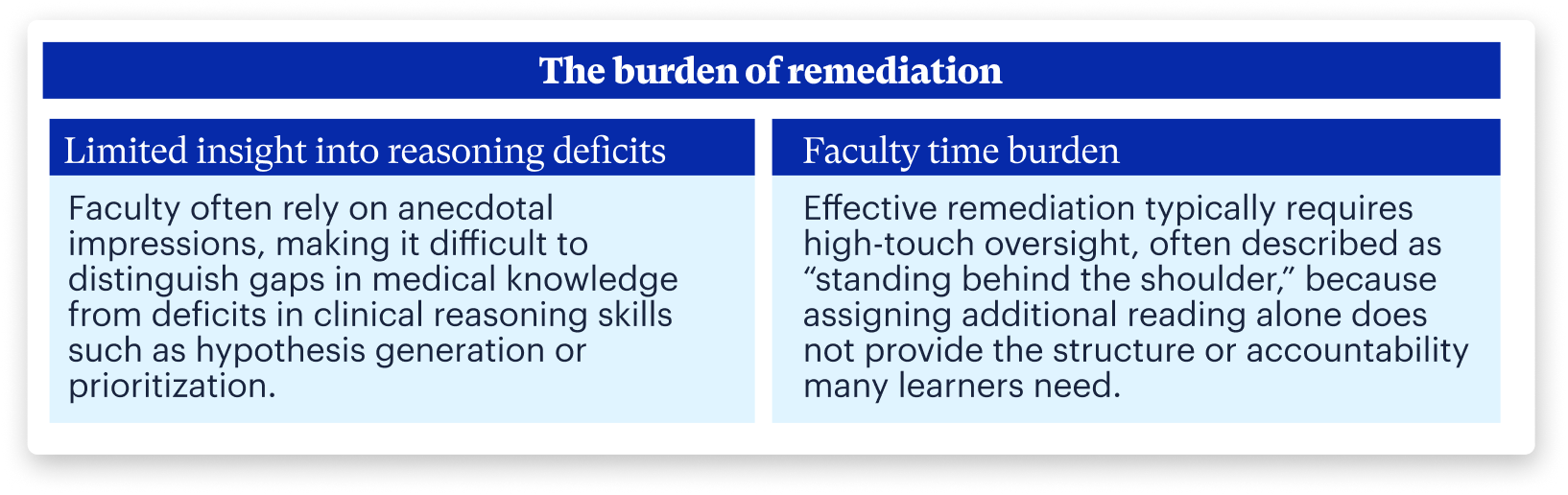

The hidden cost of reactive remediation

When reasoning gaps surface late, residency programs are pushed into reactive remediation, which rapidly increases faculty time, effort, and stress. Yet many programs still lack tools to identify who needs support, and why, early enough to intervene efficiently.

Faculty consistently describe remediation as the largest gap with the fewest resources. Evidence from residency remediation programs shows that once concerns surface, remediation becomes both intensive and prolonged, requiring an average of approximately 45 hours of faculty and administrative time per learner and often unfolding over 6 to 12 months alongside ongoing clinical duties.

The cost of late detection extends beyond time. It increases cognitive load for faculty, delays learner support, and raises patient safety concerns, making high-touch remediation unsustainable as a primary strategy for addressing emerging or intermittent gaps.

The solution: standardizing the "bread and butter"

If the core problem is invisible reasoning gaps, the solution, alongside more simulation, is standardized, scalable exposure to the decisions that matter most. This begins with standardized onboarding that establishes a baseline for clinical reasoning, clinical skills, and communication. Beyond onboarding, programs need ways to support growth over time and to scale remediation.

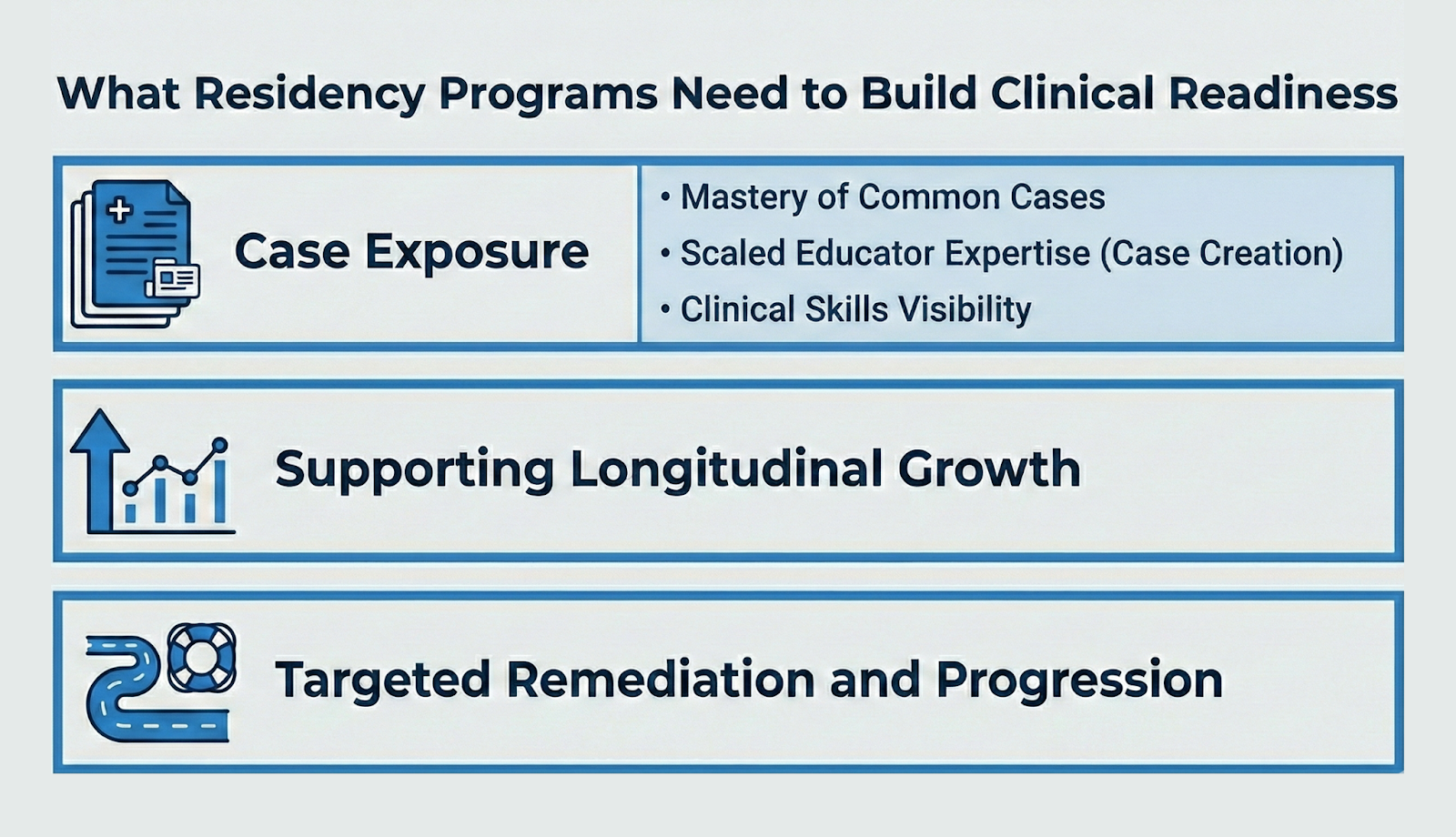

What residency programs need:

- Case exposure

  - Mastery of the common cases: While complex or rare cases are intellectually engaging, true intern readiness relies on common cases. A scalable library allows programs to ensure every learner encounters core pathologies early in their training, regardless of rotation schedule or patient mix.

  - Scaling educator expertise (case creation): Educators frequently possess specific teaching pearls they want to leverage but cannot deliver consistently to every resident. Case creation tools allow programs to digitize and extend these scenarios to all residents.

- Making "clinical skills" visible: Virtual simulation enables programs to address comprehensive or highly specific skill gaps based on individual resident needs, offering safe, repeatable practice for clinical skills (e.g., EKG interpretation, POCUS) and communication skills (e.g., breaking bad news, hand-offs) that are difficult to teach through lectures alone.

- Supporting longitudinal growth: Educators need a way to thread learning throughout residency, allowing residents to revisit core concepts with increasing complexity. Repeating cases over time allows programs to track the maturation of clinical judgment beyond episodic snapshots.

- Targeted remediation and progression: By making skill gaps visible early and consistently throughout residency and providing targeted case-based practice, programs can support structured, longitudinal remediation and readiness for independent clinical practice, while reducing faculty workload.

A shift toward scalable visibility with AI

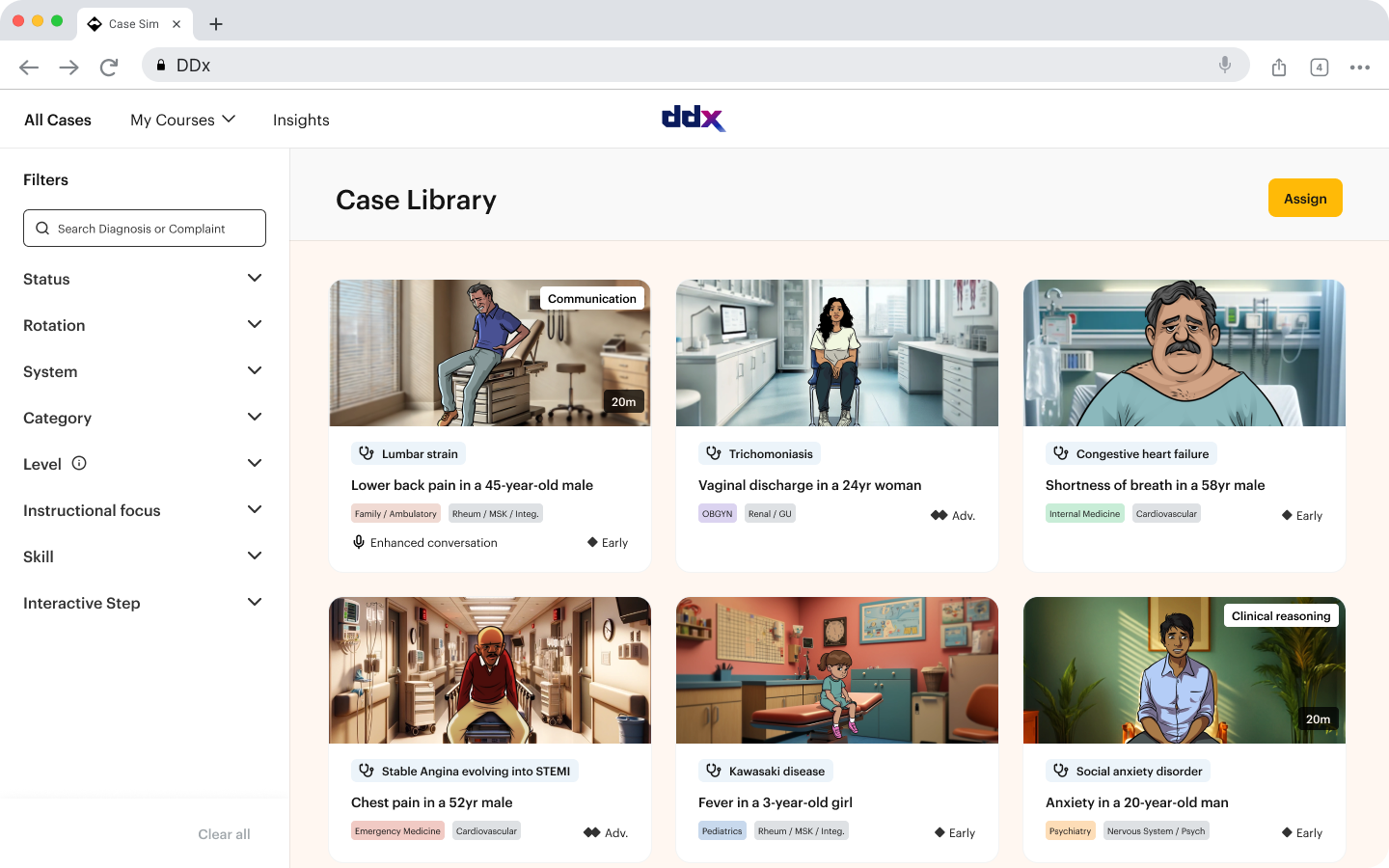

For a solution to be effective in residency training, it must give faculty early, actionable visibility into resident reasoning while providing residents safe, realistic opportunities to practice clinical decision-making. DDx by Sketchy addresses the visibility gap and burden of late-stage remediation through a scalable, case-based clinical reasoning platform that spans intern bootcamp through residency.

By standardizing exposure to high-acuity, common, and rare presentations, DDx supports longitudinal, competency-aligned assessment and makes reasoning visible early, enabling safer, more targeted intervention. Rather than adding another episodic assessment, DDx provides continuous insight into how residents think under pressure, not just the answers they reach.

For faculty

- Diagnose the “why”: DDx captures decision-making across each step of a case, allowing faculty to identify specific gaps as they arise rather than rely on anecdotal impressions. Cases are structured across three domains:

  - Clinical reasoning: How residents generate, prioritize, and revise differentials as new information emerges.

  - Clinical skills: Targeted practice in high-yield interpretive and applied skills, such as EKGs or imaging.

  - Communication skills: Real-world interactions with patients, families, and care teams, including hand-offs and escalation conversations.

- Defensible, longitudinal documentation: Standardized cases and rubric-aligned tracking provide objective evidence of reasoning patterns to support remediation and Clinical Competency Committee decisions.

- Reduced burden: Asynchronous, targeted case practice replaces high-touch shadowing, making remediation more scalable.

For learners

- Safe space to practice: Residents manage high-stakes, time-sensitive scenarios without risk to patient safety.

- Feedback aligned with reality: Feedback focuses on how decisions are made, not just the final answer.

- Clearer expectations: Learners gain a concrete view of their strengths, gaps, and path to improvement.

Rethinking readiness as a longitudinal capability with DDx

Clinical readiness in residency is dynamic, shifting across rotations, acuity levels, and call schedules, yet episodic assessments struggle to capture this reality. DDx by Sketchy addresses this gap with a clinician-built, case-based platform aligned to the real progression of residency training.

Through interactive simulations spanning clinical reasoning, clinical skills, and communication, DDx provides a holistic view of resident performance. Longitudinal analytics and rubric-based evaluations enable early gap identification and targeted remediation.

The question for GME is no longer whether clinical reasoning matters, but how reliably programs can see it when it matters most.