Nurse practitioner (NP) education has evolved rapidly, expanding access through hybrid delivery models, flexible schedules, and pathways designed for working clinicians. These changes have strengthened the profession and broadened who can pursue advanced clinical roles.

At the same time, these shifts have surfaced a challenge many NP educators recognize: clinical reasoning remains difficult to observe early and consistently across the curriculum, highlighting the need for more standardized approaches to assessment.

Insight into how learners think remains limited outside clinical encounters, which leaves learner confidence fragile, makes remediation harder, and increases faculty effort. While programs have introduced Objective Structured Clinical Examinations (OSCEs), simulations, and standardized patients (SPs) earlier in training, exposure and insight still vary by learner and evaluator, and these approaches are difficult and costly to scale.

With rapid advances in AI, there is a clear opportunity to address these systemic gaps. Read on to explore how AI-powered, simulation-based assessment can pair standardized exposure with direct visibility into clinical reasoning.

The challenge of measuring clinical readiness

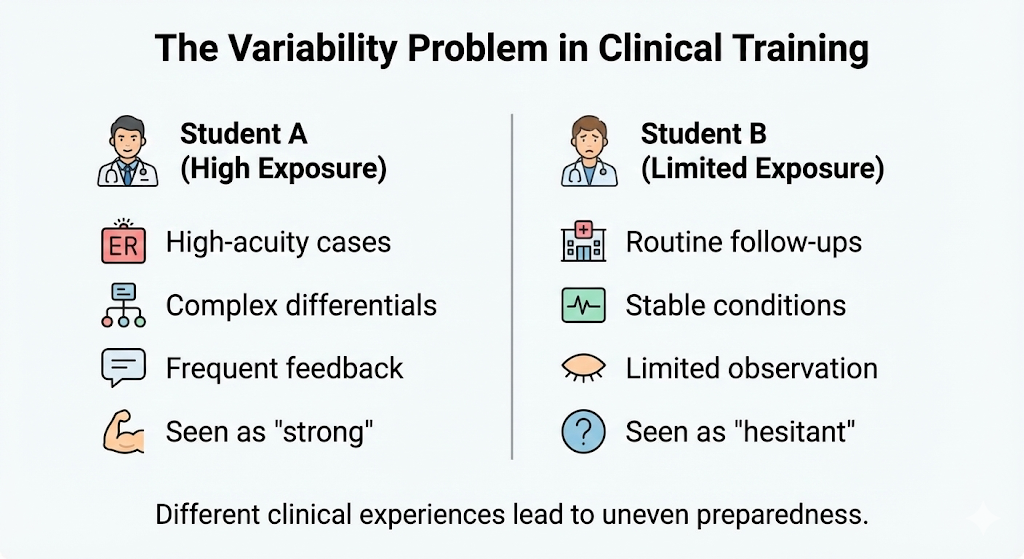

NP education faces two challenges. Clinical reasoning is most observable during rotations, yet assessment at this stage is late and inconsistent. Meanwhile, variability in clinical exposure makes it difficult to distinguish true reasoning gaps from differences in opportunity. Consequently, readiness is often inferred rather than observed, gaps surface when remediation is hardest, and faculty are left reacting instead of guiding.

1. Gaps in clinical visibility

The structure of clinical rotations limits consistent visibility into clinical reasoning.

- Learners must manage real patient care and adapt to clinical workflows

- Time pressures limit sustained observation

- Faculty and preceptors often interact with learners briefly

Once clinical responsibilities intensify, opportunities for deliberate practice and remediation narrow.

2. Variability in clinical exposure

Clinical experiences vary widely across sites, preceptors, and patient populations. Because students often secure their own preceptors, training quality and diagnostic exposure also differ from one learner to the next.

This variability complicates assessment.

- Apparent gaps may reflect exposure differences, not reasoning ability

- Narrative evaluations are difficult to compare

- Programs lack shared benchmarks to assess readiness consistently across cohorts

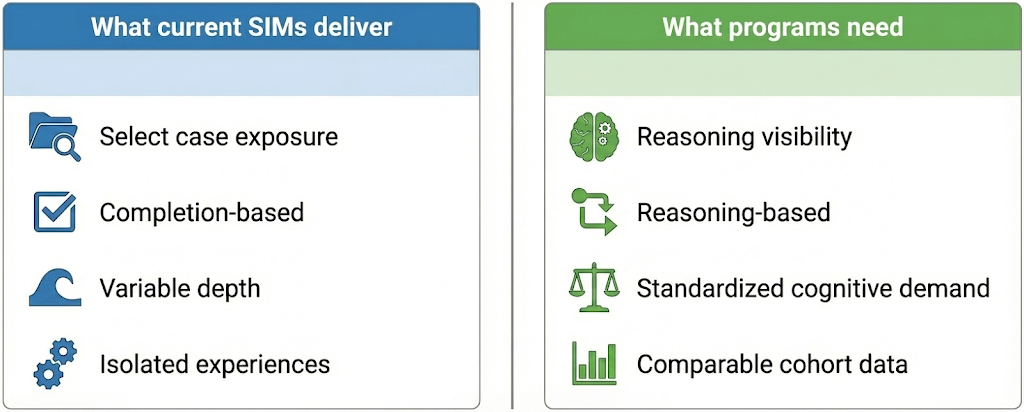

Why current NP simulation solutions haven’t solved this

Most NP programs already use simulation tools such as virtual patients, OSCEs, and AI-driven case platforms to increase exposure and surface gaps earlier. Yet these tools still provide neither early, comparable insight into clinical reasoning nor a standardized way to integrate simulation into the curriculum.

Traditional OSCEs

Traditional OSCEs offer episodic snapshots of performance but are costly (up to $900 per student) and difficult to scale.

In practice, this results in:

- Infrequent assessment due to cost, staffing, and logistical constraints

- Variable feedback quality across evaluators

- Limited ability to track reasoning development over time

- Difficulty building learner confidence and comparing readiness at scale

Virtual simulations

Most simulation platforms are designed to support case-based learning and skill acquisition, not to make clinical reasoning visible, comparable, or trackable over time.

What programs are getting today:

- A limited selection of simulated cases

- Inconsistent case depth and complexity

- Variable feedback quality

- Limited insight into how learners think over time

The challenge is no longer access to simulation. It is the lack of standardized, scalable approaches that make clinical reasoning visible without adding faculty burden.

DDx: A standardized, scalable alternative

The emergence of AI presents a powerful opportunity for NP programs to close the clinical reasoning training gap, especially for hybrid and online programs with fewer opportunities for in-person training. Just as importantly, AI-enabled simulation supports how NPs are trained to practice by emphasizing continuity of care, clinical judgment over time, and patient-centered decision-making across settings. What would once have been impossible is becoming achievable and will soon be the norm, allowing programs to reduce the time, cost, and burden tied to late-stage remediation.

Faculty can now meet fundamental goals:

- Deliver high-fidelity, multi-role simulations that truly reflect real-world clinical practice in a safe environment

- Expose students’ clinical reasoning across the full patient encounter, from history taking through diagnosis and management, over a wide range of cases

- Deliver OSCE-equivalent simulations at up to 80% lower cost than standardized patient–based assessments, making large-scale adoption financially feasible

- Support standardized, longitudinal assessment of clinical reasoning across learners

- Generate data that distinguishes clinical exposure from true readiness and enables early, on-target remediation

With DDx:

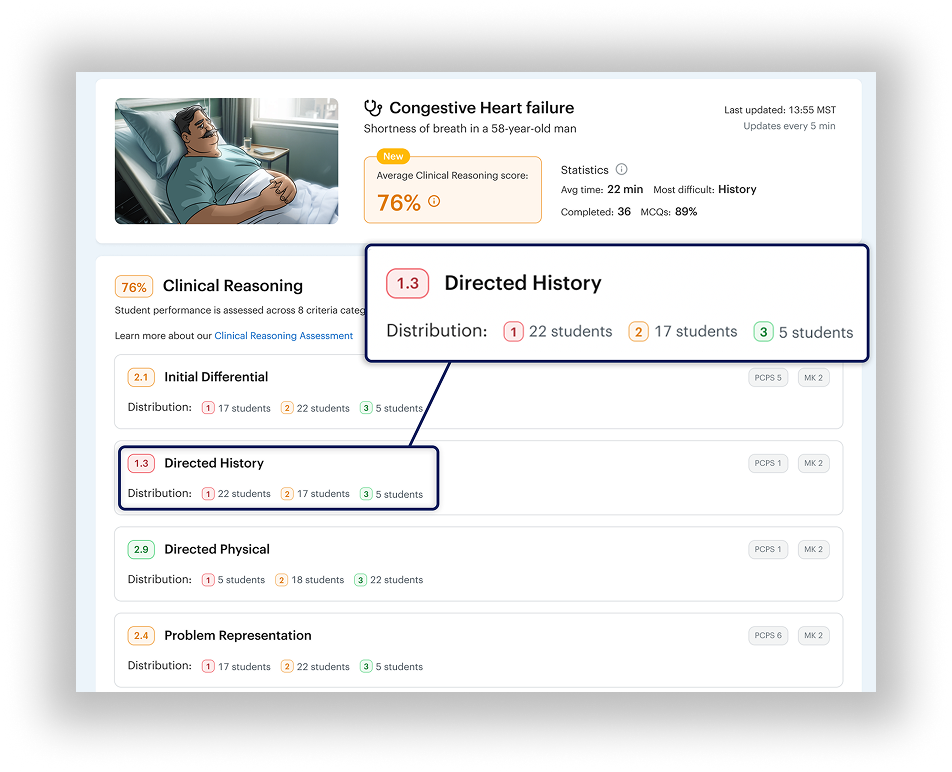

DDx captures how learners evaluate patients over time, integrate evolving information, and adjust decisions in context, reflecting the continuity of care central to NP practice. Because NP care is inherently patient-centered, DDx makes the reasoning behind clinical choices visible, not just the final answer.

- Every learner encounters comparable diagnostic uncertainty while reasoning through patient care over time, not just a single decision point

- Exposure to core and rare conditions relevant to NP scope and population focus is guaranteed, not left to chance

- Clinical reasoning is captured across the full encounter, making patient-centered judgment, prioritization, and decision-making visible, not inferred

Faculty, in turn, gain key benefits without added cost or workload. They can:

- Gain early, comparable insight into clinical readiness

- Scale reasoning training to large cohorts

- Provide targeted support instead of broad remediation

- Customize cases flexibly across a broad range of clinical scenarios

Over time, comparable assessment data also reveals cohort-level trends, enabling programs to address systemic gaps proactively. Effort and cost can be partially shifted earlier in the curriculum, where gradual intervention is more effective and less disruptive. This shift also strengthens accreditation and continuous quality improvement efforts.

Simulation does not replace patient care experiences, but it prepares learners to engage with clinical environments more effectively by ensuring that foundational reasoning skills are developed, observed, and supported before stakes are highest.

Building a more predictable path to readiness

Variability in clinical exposure will remain a defining feature of modern NP education. What programs can control is whether that variability obscures readiness or is balanced by consistent insight into how learners think.

DDx addresses this gap by standardizing how clinical reasoning is assessed. This ensures every learner encounters comparable diagnostic complexity, making reasoning visible and comparable regardless of clinical site or preceptor.

Because DDx cases are scalable and repeatable:

- Programs gain visibility without increasing reliance on resource-intensive standardized patient encounters

- Through a dedicated Clinical Reasoning Assessment tool, faculty receive early, comparable data that supports targeted guidance rather than late-stage remediation

- Learners benefit from meaningful case exposure that strengthens clinical judgment before entering high-stakes environments

The goal is not to replace clinical training or existing simulation investments. With DDx, programs can move beyond counting cases and begin measuring thinking: developing readiness deliberately, evaluating it consistently, and supporting it at scale.